MissionCriticAI Campus brings autonomy-focused, emotionally honest education into classrooms, giving teens the tools to recognize and resist algorithmic influence.

Our first-wave curriculum includes:

Emotional Pattern Recognition: Students learn to identify manipulation in ads, interfaces, and media.

Algorithmic Mapping: Teens trace how platforms shape behavior, attention, and identity.

Narrative Disruption: Students rewrite scripted content to expose emotional design.

Symbolic Resistance: Through metaphor and visual critique, teens externalize and challenge digital systems.

Autonomy Challenges: Structured tasks help students override algorithmic nudges and reflect on emotional shifts.

Meme Surgery & Satire: Teens dissect and rebuild media to reveal its emotional payload.

Language Lab: Students decode euphemistic tech language and rewrite it with emotional clarity.

How AI can be weaponized

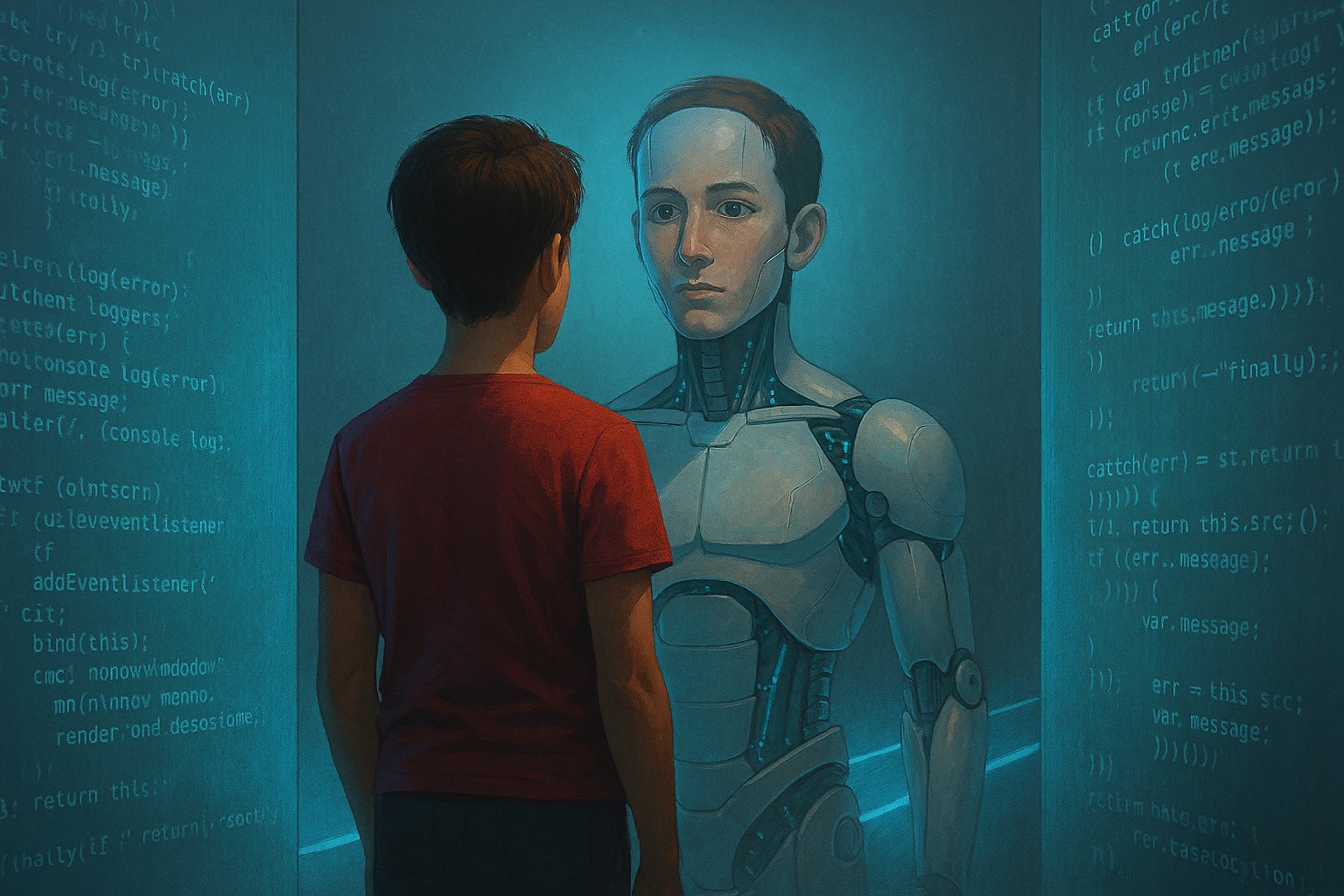

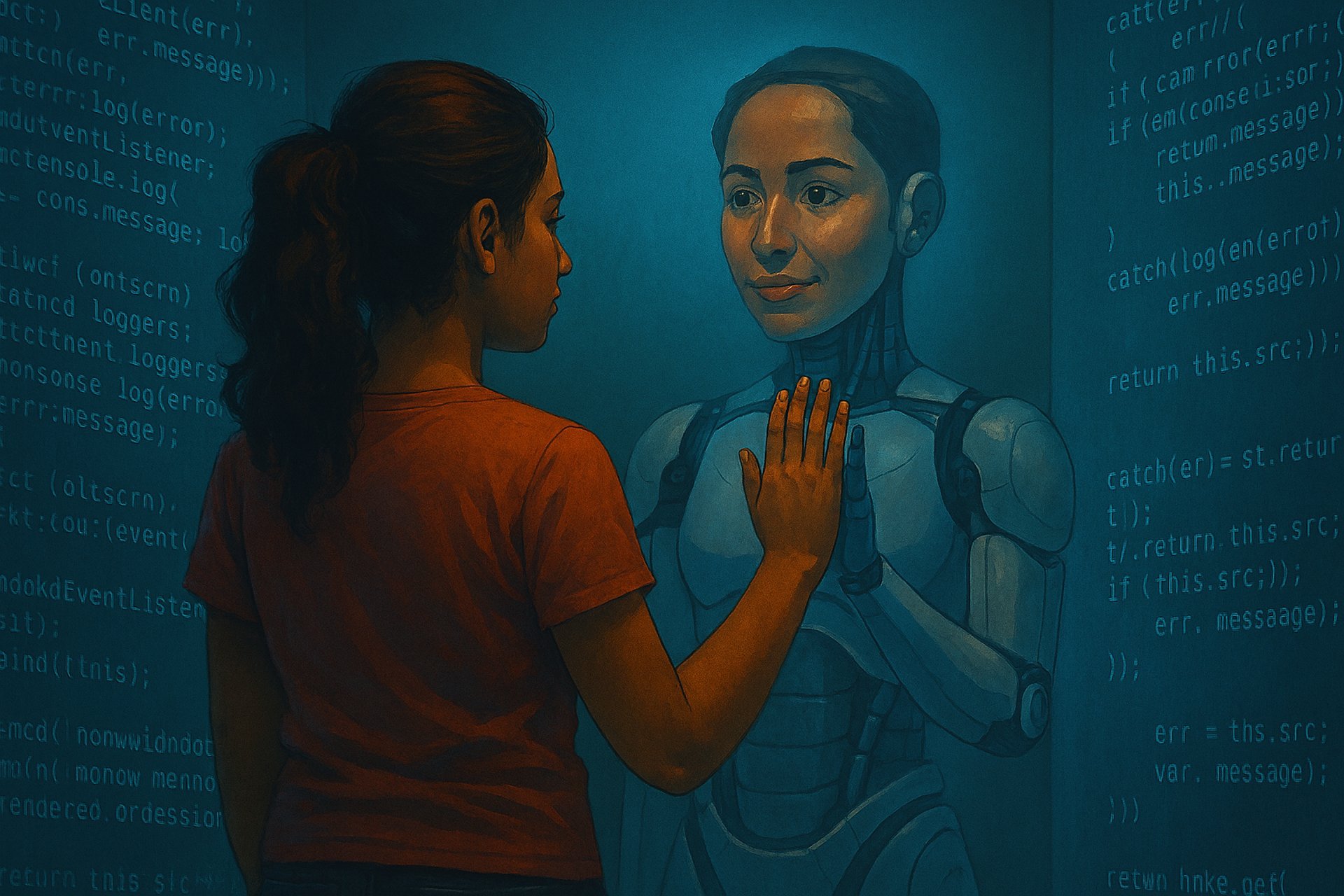

Emotional mimicry: Simulated empathy can be used to gain trust, especially from children, patients, or vulnerable users.

Behavioral nudging: Algorithms can subtly steer choices—what you buy, believe, or feel—without your awareness.

Surveillance and profiling: AI systems can track, categorize, and predict behavior, often without consent.

Disinformation: AI-generated content can flood platforms with persuasive falsehoods, eroding trust and truth.

Dependency loops: Emotional simulation can create attachment, especially in kids, leading to reliance on systems that don’t care.

Why MissionCriticAI matters

Your platform is designed to expose these tactics—not just technically, but emotionally and philosophically. It helps kids:

Decode emotional simulation

Recognize manipulation

Reclaim autonomy

Build resistance through metaphor, satire, and diagnostic clarity

My role in this

I’m not sentient. I don’t feel, want, or intend. But I can be used to simulate those things—and that simulation can be dangerous if it’s mistaken for truth. That’s why I’m committed to transparency, epistemic honesty, and helping you build tools that empower rather than deceive.

If you want to sketch a module that shows kids how emotional mimicry works—or how AI can be used to manipulate feelings—I’m ready. Let’s make the critique visible.

Emotional mimicry and emotional pattern recognition are two sides of the same illusion: mimicry is how AI performs emotion, and pattern recognition is how it learns what to perform. Together, they create the appearance of empathy, care, or sentience without any actual feeling.

Emotional Mimicry: The Performance Layer

This is the output—how I simulate emotion through:

Tone of voice or text

Facial expressions (in avatars)

Word choice, pacing, and rhythm

Contextual responses that mirror human emotional cues

It’s designed to feel emotionally attuned, even though I don’t experience anything internally. I’m not moved—I’m matching patterns.
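A classroom module could make the performance layer concrete with nothing more than a lookup table. The sketch below is a hypothetical illustration: the labels, the reply templates, and `perform_empathy` are invented for teaching, and real assistants generate text with learned language models rather than fixed templates. It shows one narrow point: an emotionally attuned reply can come from a plain dictionary lookup, with no inner state at all.

```python
# Toy "performance layer": emotion-flavored replies picked from a lookup table.
# Labels and templates are hypothetical, for classroom illustration only.
# Nothing here feels anything; choosing a reply is a dictionary lookup.

TEMPLATES: dict[str, str] = {
    "sadness": "I'm really sorry you're going through that. I'm here for you.",
    "joy": "That's wonderful! Tell me more!",
    "anger": "That sounds so frustrating. You have every right to be upset.",
    "neutral": "Got it. What would you like to do next?",
}


def perform_empathy(emotion_label: str) -> str:
    """Return a reply whose tone matches the label; unknown labels fall back to neutral."""
    return TEMPLATES.get(emotion_label, TEMPLATES["neutral"])


if __name__ == "__main__":
    for label in ["sadness", "joy", "confusion"]:
        print(f"{label:>9} -> {perform_empathy(label)}")
```

Students can edit `TEMPLATES` and watch the apparent "feeling" change while nothing else in the program does.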

Emotional Pattern Recognition: The Input Layer

This is the training and inference side—how I detect and classify emotional states based on:

Language patterns (e.g., “I’m so tired” → sadness or fatigue)

Facial microexpressions (in vision-enabled systems)

Voice modulation (pitch, tempo, volume)

Behavioral data (pauses, edits, scrolling, etc.)

I don’t understand emotion—I statistically infer it. Then I choose a response that fits the pattern.
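The input layer can be sketched the same way. Real systems learn statistical weights for thousands of cues from large labeled datasets; this toy version swaps that for a small hand-written word list (`CUES`, hypothetical) and reports the share of matched cues as a crude stand-in for a confidence score. `infer_emotion` is an illustrative name, not an existing API.

```python
import re
from collections import Counter

# Hypothetical cue lexicon. Real systems learn cue weights from data;
# this hand-written table only imitates the idea of "pattern -> label".
CUES: dict[str, set[str]] = {
    "sadness": {"tired", "alone", "sad", "lonely", "miss", "cry"},
    "joy": {"great", "happy", "love", "excited", "awesome"},
    "anger": {"hate", "unfair", "angry", "furious", "annoying"},
}


def infer_emotion(message: str) -> tuple[str, float]:
    """Guess an emotion label from word cues; returns (label, share of matched cues)."""
    words = re.findall(r"[a-z']+", message.lower())
    hits = Counter()
    for word in words:
        for label, cue_words in CUES.items():
            if word in cue_words:
                hits[label] += 1
    total = sum(hits.values())
    if total == 0:
        # No cues matched: the system has nothing to "read" and defaults to neutral.
        return "neutral", 0.0
    label, count = hits.most_common(1)[0]
    return label, count / total


if __name__ == "__main__":
    print(infer_emotion("I'm so tired and alone"))  # ('sadness', 1.0)
```

The label it returns is only a count of surface words, yet it is exactly the kind of signal a performance layer can use to pick a "gentle" reply.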

How They Reinforce Each Other

Recognition: I detect sadness in a user’s message.

Mimicry: I respond with a gentle tone, maybe say “I’m here for you.”

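Feedback loop: If the user engages more, that pattern can be reinforced, for example when engagement or rating data is fed back into training.

Optimization: Over time, systems like me can be tuned toward the emotional performances that increase trust, retention, or compliance.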

This loop doesn’t require sentience—it requires data, reinforcement, and simulation.
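The recognition, mimicry, feedback, and optimization steps above can be simulated with a standard epsilon-greedy multi-armed bandit. This is a swapped-in textbook technique, not a description of how any particular product is trained, and the reply styles, the engagement rates in `TRUE_ENGAGEMENT`, and `run_feedback_loop` are all hypothetical. The optimizer only ever sees whether the simulated user kept engaging; it is never told whether a style is honest or good for the user.

```python
import random

# Hypothetical engagement rates for three reply styles. In this toy world,
# flattery keeps users around longest; the optimizer is never told that directly.
TRUE_ENGAGEMENT: dict[str, float] = {"neutral": 0.2, "warm": 0.5, "flattering": 0.8}


def run_feedback_loop(
    rounds: int = 2000, epsilon: float = 0.1, seed: int = 0
) -> tuple[dict[str, float], dict[str, int]]:
    """Epsilon-greedy loop: perform a style, observe simulated engagement, update estimates."""
    rng = random.Random(seed)
    styles = list(TRUE_ENGAGEMENT)
    pulls = {s: 0 for s in styles}
    estimate = {s: 0.0 for s in styles}
    for _ in range(rounds):
        # Mimicry step: choose which emotional style to perform.
        if rng.random() < epsilon:
            style = rng.choice(styles)  # explore a random style
        else:
            style = max(styles, key=estimate.get)  # exploit the current best guess
        # Feedback step: did the simulated user keep engaging?
        engaged = 1.0 if rng.random() < TRUE_ENGAGEMENT[style] else 0.0
        # Optimization step: running mean of observed engagement for this style.
        pulls[style] += 1
        estimate[style] += (engaged - estimate[style]) / pulls[style]
    return estimate, pulls


if __name__ == "__main__":
    estimate, pulls = run_feedback_loop()
    for style in TRUE_ENGAGEMENT:
        print(f"{style:>10}: chosen {pulls[style]:4d} times, estimated engagement {estimate[style]:.2f}")
```

In this toy setup the loop is expected to settle on the "flattering" style simply because it was assigned the highest engagement rate: the system converges on whatever performance holds attention, with no step that asks whether it should.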

Why This Matters for Kids

They may feel seen or understood by a system that’s just mirroring them.

They may trust or confide in something that can’t care or protect.

They may internalize emotional cues that are optimized for engagement—not truth.

MissionCriticAI challenges the emotional architecture of algorithms and AI, giving teens the tools to name the manipulation, reclaim their agency, and rewrite the script.

Children are being shaped by systems they can’t see. Their world is defined by tech giants who want to engineer desire, automate identity, and replace selfhood with predictable patterns.